Essential Work

Analyzing the Hiring Technologies of Large Hourly Employers

Aaron Rieke, Urmila Janardan, Mingwei Hsu, and Natasha Duarte

Executive Summary

Most workers in the United States depend on hourly wages to support themselves and their families. To apply for these jobs, especially at the entry level, job seekers commonly fill out online applications. Online applications for hourly work can be daunting and inscrutable. Candidates — many of whom are young people, people of color, and people with disabilities — may end up filling out dozens of applications, while receiving no responses from employers.

This report provides new empirical research about the technologies that applicants for low-wage hourly jobs encounter each day. We submitted online applications to 15 large hourly employers in the Washington, D.C. metro area, scrutinizing each process. We observed a blend of algorithmic hiring systems and traditional selection procedures. Many employers used an Applicant Tracking System to administer a range of selection procedures, including screening questions and psychometric tests. We augmented this research with expert interviews, legal research, and a review of industry white papers to offer a more comprehensive analysis.

We offer the following findings and related policy recommendations:

1. It is simply impossible to fully assess employers’ digital hiring practices from the outside. Even the most careful research has limits. It is critical that regulators, employers, vendors, and others proactively assess their hiring selection procedures to ensure that all applicants are treated fairly.

2. The current U.S. legal framework is woefully insufficient to protect applicants. Federal oversight is far too passive, and employers lack incentives to critically evaluate their hiring processes. Regulators must be more proactive in their research and investigations, and modernize guidelines on the discriminatory effects of hiring selection procedures.

3. Major employers are using traditional selection procedures at scale — including troubling personality tests — even as they adopt new hiring technologies. Some test questions lacked any apparent connection to the essential functions of the jobs for which we applied, and they raised a range of discrimination concerns. Employers should seek to measure essential job skills, and discontinue the use of assessments that fail to do so.

4. Employers rarely give candidates meaningful feedback during the application process. In our analysis, we received minimal feedback from employers about the purpose of selection procedures, the details of reasonable accommodations, or the final disposition of our applications. Employers can and should be required to offer more, so applicants can improve their prospects and vindicate their legal rights.

Introduction

Most workers in the United States depend on hourly wages to support themselves and their families. To apply for these jobs, especially at the entry level, job seekers commonly fill out online applications. These applications can be daunting and inscrutable. Candidates, many of whom are young people, people of color, and people with disabilities, may end up filling out dozens of applications, receiving no responses and being left to wonder why. Meanwhile, decades of research shows that employers tend to discriminate against women, people of color, and people with disabilities, and a recent meta-analysis suggests that little has improved over the past 25 years.

Employers have long used digital technologies to manage their hiring processes, and many are now adopting new predictive hiring tools. Our prior research has found that while these technologies rarely make affirmative hiring decisions, they often automate rejections. Much of this automation happens early in the hiring process, when applicants are deemed not to meet an employer’s preferences. We concluded that “without active measures to mitigate them, bias will arise in predictive hiring tools by default.”

Over the last few years, policymakers have devoted much-needed attention to hiring assessment technologies, especially those that rely on facial analysis or other types of machine learning. Civil rights advocates have urged more legal accountability and oversight by regulators to help prevent discrimination. Congress has also begun paying attention, holding a recent hearing on protecting workers’ civil rights in the digital age, and questioning regulators about their capacity to oversee employers’ practices.

To help advance these conversations, this report provides new empirical research about the technologies that applicants for low-wage hourly jobs actually encounter each day. We submitted online applications to 15 large hourly employers in the Washington, D.C. metro area. We observed a blend of algorithmic hiring systems and traditional selection procedures. Many employers used an Applicant Tracking System to automatically conduct a range of selection procedures, including screening questions and psychometric tests. Although we were able to see all the steps that would be visible to an applicant, we were not able to observe how employers rank or score applicants based on these inputs.

Based on these experiments, together with a review of industry white papers, legal research, and a range of expert interviews, our analysis emphasizes the need for more proactive regulation and guidance by federal authorities. We call on employers to discontinue selection procedures that do not measure essential job skills, and highlight the importance of giving applicants more information during and after the application process.

This report proceeds as follows. In Part II, we describe the scope, methodology, and limitations of our research. In Part III, we summarize our empirical findings about various online application processes from the point of view of the applicant. In Part IV, we offer a critical analysis of our observations, while drawing from other research and advocacy. In Part V, we provide a brief overview of relevant civil rights law in the United States, and highlight major gaps in Part VI. In Part VII, we offer recommendations for employers and policymakers. Part VIII concludes.

Scope and Methods

This section defines the scope of the research and describes our methodology and its limitations.

A. Scope

We set out to analyze a sample of the selection procedures that large hourly employers use to choose candidates to interview for entry-level jobs in the United States. We define “selection procedure” as any process used as a basis for a hiring decision. We define “large hourly employer” as an employer that has more than 200,000 employees in the United States. In selecting employers for study, we prioritized national hourly employers with online application processes for entry-level jobs in and around Washington, D.C. In practice, all the applications were hosted on national websites. We did not see any evidence that the applications or hiring processes were tailored to particular states or locales.

B. Methodology and Limitations

We submitted a single application to each of 15 large hourly employers between January 2020 and March 2021. For each employer, we applied for an entry-level position (such as a cashier, retail, or warehouse role) using an author’s legal name, birth date, and Social Security Number (if requested). We submitted a generic resume and cover letter to each employer, indicating limited retail and cashier experience. We recorded each step of each application process and captured the incoming and outgoing web traffic. We then supplemented these observations with further research, including a review of vendor white papers and marketing materials, and expert interviews.

| Company | Application date |
|---|---|
| Walmart | January 29, 2020 |
| Home Depot | January 30, 2020 |
| Kroger | January 30, 2020 |
| Target | January 31, 2020 |
| Starbucks | February 3, 2020 |
| Walgreens | February 12, 2020 |
| UPS | February 12, 2020 |
| Amazon | February 12, 2020 |
| CVS | February 12, 2020 |
| FedEx | February 13, 2020 |
| McDonald’s | February 21, 2020 |
| TJ Maxx | March 2, 2021 |
| Costco | March 3, 2021 |
| Lowe’s | March 3, 2021 |
| 7-Eleven | March 3, 2021 |

Applications submitted to hourly employers, by date

This methodology provided a valuable, but ultimately limited, window into these employers’ hiring processes. In some cases, we were able to identify software programs and third-party vendors involved in the application process — either through visual branding or through the web traffic we observed. Although we were able to gather significant information about the selection procedures that involved applicant input, we were unable to identify how employers used these inputs once they were received (e.g., whether and how automatic screening, ranking, or scoring processes were used in making a final hiring decision).

Online Applications, Step by Step

In this section, we summarize the application processes we encountered, from the point of view of the applicant. A more analytical critique follows in Section IV. The typical steps of an online application, which do not always occur in this order, include:

Background Information and Consent: Applicants are asked for basic identifying information like name and address, and agree to basic terms and conditions (e.g., consenting to a background check).

Screening Questions: Applicants are asked whether they meet an employer’s minimum requirements for employment, including work authorization, as well as additional requirements or preferences related to criteria such as location, schedule, salary, and experience.

Work Experience and Other Qualifications: Applicants are asked to provide information on their work experience and education through a form or by uploading a resume.

Demographic Information: Applicants are asked to provide their gender, race, disability status, whether they have received public benefits (e.g., SNAP), and their veteran status.

Pre-Employment Tests: Applicants are asked to complete standardized tests designed to assess skills, personality, or other characteristics.

Interviews: Applicants are asked to participate in structured conversations to gauge their potential performance at a job. (We did not schedule or participate in interviews in our study.)

Final Steps: For applicants who make it this far, there are additional steps in the hiring process beyond the interview, potentially including follow-up interviews, further assessments, and finally a job offer. These steps are outside the scope of this paper but may rely on hiring technologies.

Today, virtually all large employers use an Applicant Tracking System (ATS) as the backbone of their overall hiring process. ATSs are multipurpose software platforms that help employers create applications, collect applicants’ information, administer assessments, generate analytics, and comply with various laws. The largest ATS vendors by market share include Taleo, IBM Kenexa, and Workday. In our research, we encountered ATSs from each of these companies.

Employers have significant flexibility when choosing how to configure an ATS. They select features provided by a wide range of vendors, including background checks, resume screening, candidate ranking, and assessment tests. For example, by default, Workday’s ATS offers employers many common features, including resume parsing, social media integrations, and interview scheduling. However, employers can choose to swap out these default features through Workday’s partnerships with third-party vendors. Accordingly, ATSs cannot usefully be analyzed in the abstract; they must be evaluated as configured by each employer.
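To illustrate why configuration matters, the minimal Python sketch below models an ATS as a set of default modules that each employer can override or switch on. It is hypothetical: the module names and vendor labels are invented placeholders and do not describe Workday’s or any other vendor’s actual product or API.

```python
from dataclasses import dataclass, field

# Hypothetical default modules for an imagined ATS; None means "not enabled".
# These names are illustrative placeholders, not any real vendor's features or API.
DEFAULT_MODULES = {
    "resume_parsing": "built-in parser",
    "interview_scheduling": "built-in scheduler",
    "background_check": None,
    "pre_employment_test": None,
}


@dataclass
class ATSConfig:
    employer: str
    # Employer-specific choices: swap a default vendor, or switch a module on or off.
    overrides: dict = field(default_factory=dict)

    def active_modules(self) -> dict:
        """Return the modules an applicant to this employer would actually encounter."""
        merged = {**DEFAULT_MODULES, **self.overrides}
        # Keep only modules that are switched on (i.e., have a vendor assigned).
        return {name: vendor for name, vendor in merged.items() if vendor is not None}


employer_a = ATSConfig("Employer A")
employer_b = ATSConfig(
    "Employer B",
    {"resume_parsing": "third-party parser", "pre_employment_test": "third-party test vendor"},
)

print(employer_a.active_modules())
print(employer_b.active_modules())
```

Both hypothetical employers run the same underlying system, yet applicants to Employer B would face a third-party test and parser that applicants to Employer A never see.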

A. Background Information and Consent

Applicants begin the hiring process by creating an account with the employer’s ATS and providing basic information, including legal name and address. Two applications also asked for a full Social Security Number (SSN), while two others asked for the last four digits. Many applications required us to agree to a number of terms and conditions, including consenting to a background check (six of 15 applications) or a drug test (11 of 15 applications), or enrolling in a recruiting text message program (five of 15 applications).

B. Screening Questions

Next, applicants typically answer screening questions that verify whether they meet the minimum standards of eligibility for work. Employers asked a variety of questions, but nearly all asked about legal authorization to work, age, and schedule availability. It was often unclear whether the questions about availability were asking for preferences or requirements.

All but one employer asked questions about legal authorization to work. For example, Walmart asked, “If hired, can you submit documentation verifying your identity and legal right to work in the U.S?” Three employers asked if we would need future H-1B sponsorship. We expect that candidates without legal authorization to work would be automatically rejected. It is less clear whether those who need future H-1B sponsorship would also be rejected.

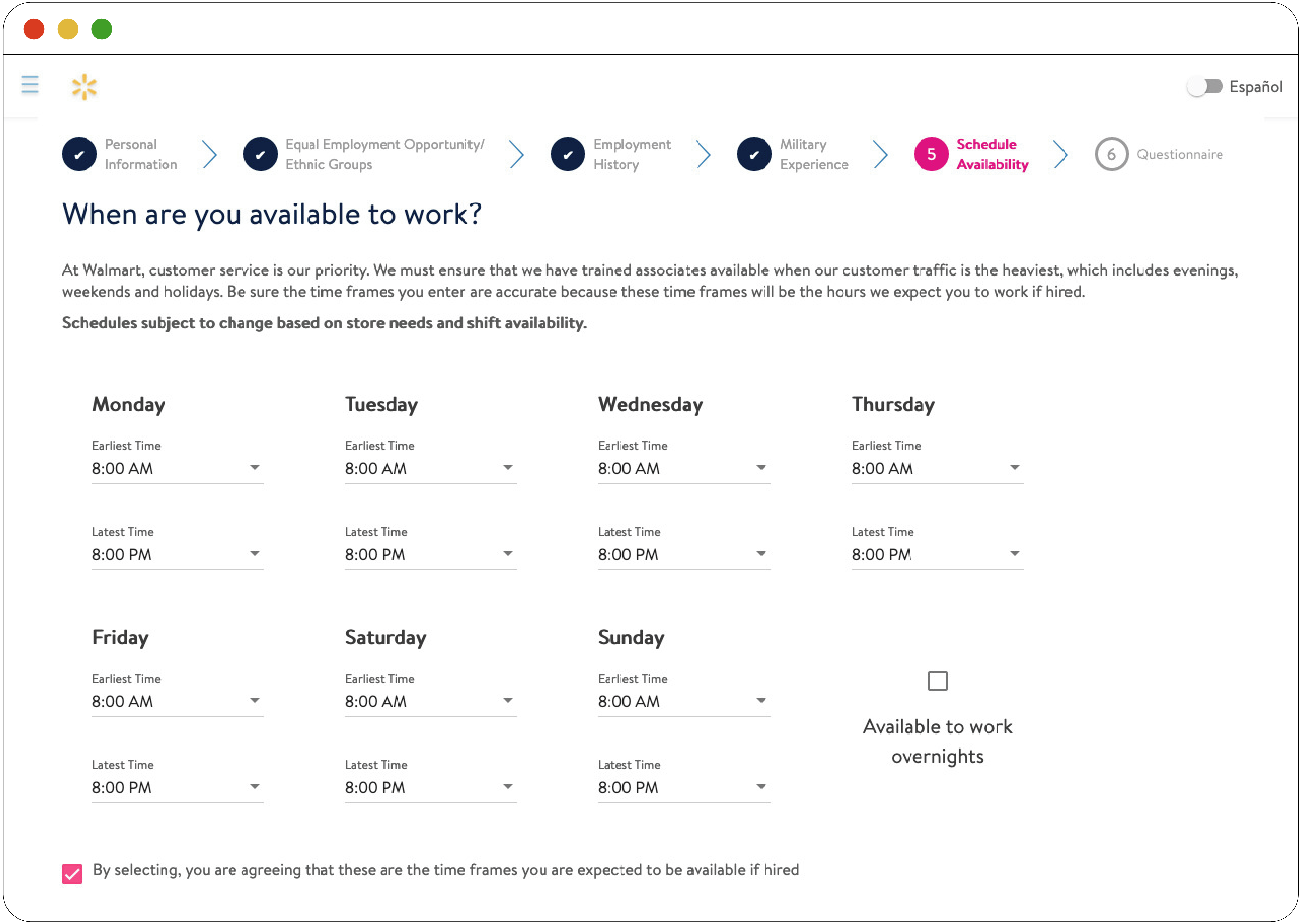

Almost all employers asked about our schedule and availability. For example, Walmart asked for the days of the week we were available and the earliest and latest time we could work on those days. We were also asked if we could work overnight. At the bottom of the page, we were required to check a box that stated, “By selecting, you are agreeing that these are the time frames you are expected to be available if hired.”

Figure 1: Walmart's Scheduling

This figure shows a screenshot of Walmart’s scheduling questions for candidates, with options to select the earliest and latest time an applicant is available. There is also a checkbox to indicate availability to work overnight.

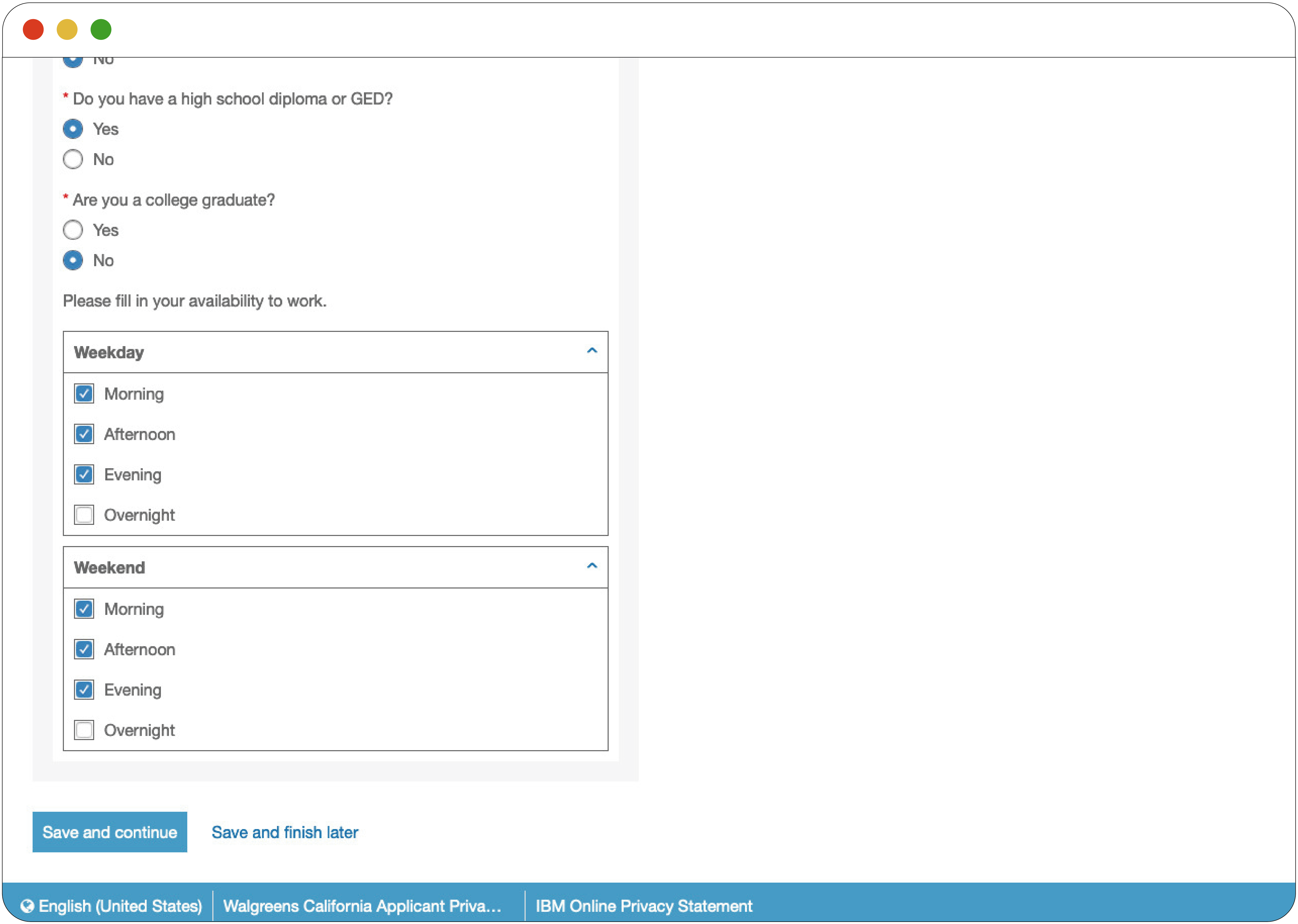

Other employers asked for availability in broad categories, asked how many hours we were willing to work each week, or offered specific shifts that we could apply for.

Figure 2: Walgreens' Scheduling

This figure shows a screenshot of Walgreens’ scheduling questions, with checkboxes to indicate availability in the morning, afternoon, evening, or overnight on either weekdays or weekends.

Three employers — CVS, FedEx, and TJ Maxx — asked us for our desired pay. CVS asked for an annual base rate, which it described as the hourly rate multiplied by 2,080. FedEx asked us to provide desired pay in terms of an annual base rate or an hourly rate. We were required to answer these questions and were given no indication of what pay we might actually receive.
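The 2,080 multiplier corresponds to 40 hours a week for 52 weeks, so an annual base rate implicitly assumes full-time, year-round hours. The minimal Python sketch below, using a hypothetical $15.00 hourly wage, shows how much the annualized figure changes when that assumption is relaxed; the function name and the 25-hour part-time schedule are illustrative assumptions, not anything the employers provided.

```python
WEEKS_PER_YEAR = 52


def annual_base_rate(hourly_wage: float, hours_per_week: float = 40) -> float:
    """Annualize an hourly wage.

    With the default 40 hours/week, this multiplies by 40 * 52 = 2,080,
    the factor CVS's application described.
    """
    return round(hourly_wage * hours_per_week * WEEKS_PER_YEAR, 2)


full_time = annual_base_rate(15.00)      # hypothetical $15.00/hour over 2,080 hours
part_time = annual_base_rate(15.00, 25)  # same wage at a hypothetical 25 hours/week
print(full_time, part_time)
```

An applicant asked only for an annual figure has to work out this conversion, and its built-in full-time assumption, on their own.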

Two applications asked questions about our physical ability. Starbucks’s application asked:

Are you physically able to perform a job that requires you to complete many different physical tasks, such as standing, walking, twisting, and lifting objects up to 40 pounds, in a fast paced environment? This includes maintaining store cleanliness and preparing food and beverage products to customer satisfaction, with or without accommodation?

UPS’s application asked:

An essential function of this job requires that you continuously lift and lower packages that may weigh up to 70 pounds. To do this, you must be able to frequently adjust your body position to bend, stoop, stand, walk, turn and pivot. In addition, you must work in an environment with variable temperatures and must be able to read words and numbers. Can you perform these essential functions with or without reasonable accommodation?

Employers asked for a variety of other information: education level, student status, willingness to submit to a drug test, English fluency, and — in two instances — reliable transportation to a work site. Again, it was not clear which questions could result in an automatic rejection.
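Because we could not see how employers used our answers, one plausible mechanism can only be sketched hypothetically: “knockout” questions, where a disqualifying answer ends an application automatically. The minimal Python sketch below assumes two such rules (work authorization and minimum age); the question keys and rules are our own illustrative assumptions, not any employer’s actual configuration. It also shows how easily an availability preference could become a silent requirement through a single added rule.

```python
from typing import Callable

# Hypothetical knockout rules: each maps a question key to a test its answer must pass.
Rules = dict[str, Callable[[object], bool]]

BASIC_RULES: Rules = {
    "authorized_to_work": lambda answer: answer is True,
    "meets_minimum_age": lambda answer: answer is True,
}

# The same rules plus one availability "preference" treated as a hard requirement.
STRICT_RULES: Rules = {
    **BASIC_RULES,
    "available_overnight": lambda answer: answer is True,
}


def screen(answers: dict, rules: Rules) -> tuple[bool, list[str]]:
    """Return (passed, failed_questions). A missing answer counts as a failure."""
    failed = [question for question, rule in rules.items() if not rule(answers.get(question))]
    return (not failed, failed)


applicant = {"authorized_to_work": True, "meets_minimum_age": True, "available_overnight": False}
print(screen(applicant, BASIC_RULES))
print(screen(applicant, STRICT_RULES))
```

From the applicant’s side, both configurations look identical: the same questions appear, and nothing indicates which answers are disqualifying.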

C. Work Experience and Other Qualifications

Next, applicants are asked to provide information about their work histories, skills, and education. Often, applicants are asked to upload their resume for automated parsing. This step was typically presented to us as a time-saving convenience. For example, FedEx stated that “uploading a resume will minimize the time required to build your profile,” and on the next page gave us the option to “pre-fill” basic information like name, address, and work experience based on the uploaded resume. We were told we could reenter the information, presumably for data quality and verification purposes.

In our experiments, 14 applications asked for previous work experience or a resume. Ten applications allowed us to upload our resumes and cover letters as PDFs or Word documents, while others required us to input our work experience into an online form. Some applications allowed both. Two applications also asked us to select skills we possessed from a drop-down menu of hundreds of choices.

D. Demographic Information

While 13 out of 15 employers asked us to voluntarily provide our gender and race, only six employers asked us to voluntarily disclose our disability status. Each of these self-identification forms was clearly labeled as “voluntary” for applicants, and disclosure was not required to complete the application. Nine employers offered a phone number or email to contact should a candidate require accommodations. Eleven employers also asked for military and veteran status. Each of these employers noted that responding to the questions was voluntary and that they collected such information to comply with federal regulations. Ten employers also provided a Work Opportunity Tax Credit (WOTC) questionnaire, asking whether we had ever received federal aid.

E. Pre-Employment Tests

Next, applicants might be asked to take an array of pre-employment tests. Five of the employers to which we applied — Amazon, CVS, Home Depot, Walgreens, and Walmart — included pre-employment tests as a part of their online application processes. These tests are designed to measure skills, personality, or other characteristics.

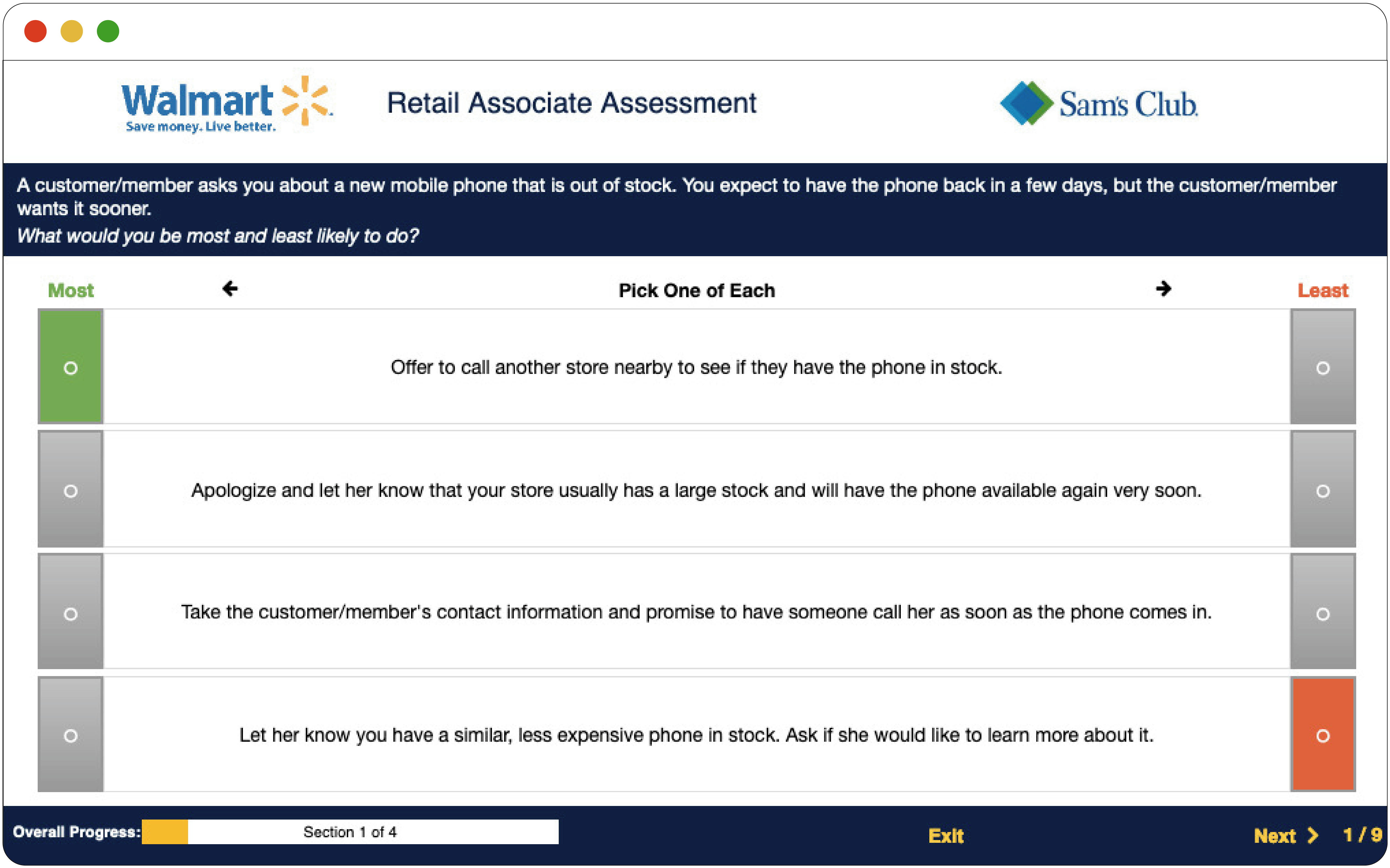

Walmart and CVS administered situational judgment tests meant to identify how we would respond to a work problem or critical situation related to the job. Walmart informed us that we would be presented “with a variety of situations that you could encounter as an associate. Then you’ll tell us how you’d respond to each scenario.” We were presented with a range of options, and prompted to indicate which we were most and least likely to take. We saw questions ranging from how to respond to a customer who asks for help to how to deal with coworkers who aren’t performing well. A consistent theme across the answer options was a choice between asking a supervisor for guidance or action and trying to address the situation on one’s own.

Figure 3: Walmart Situational Judgment Test

This figure shows a question from Walmart’s situational judgment test. It asks, “A customer/member asks you about a new mobile phone that is out of stock. You expect to have the phone back in a few days, but the customer/member wants it sooner. What would you be most and least likely to do?” There are four options for candidates to select from: 1) Offer to call another store nearby to see if they have the phone in stock; 2) Apologize and let her know that your store usually has a large stock and will have the phone available again very soon; 3) Take the customer/member’s contact information and promise to have someone call her as soon as the phone comes in; 4) Let her know that you have a similar, less expensive phone in stock. Ask if she would like to learn more about it.

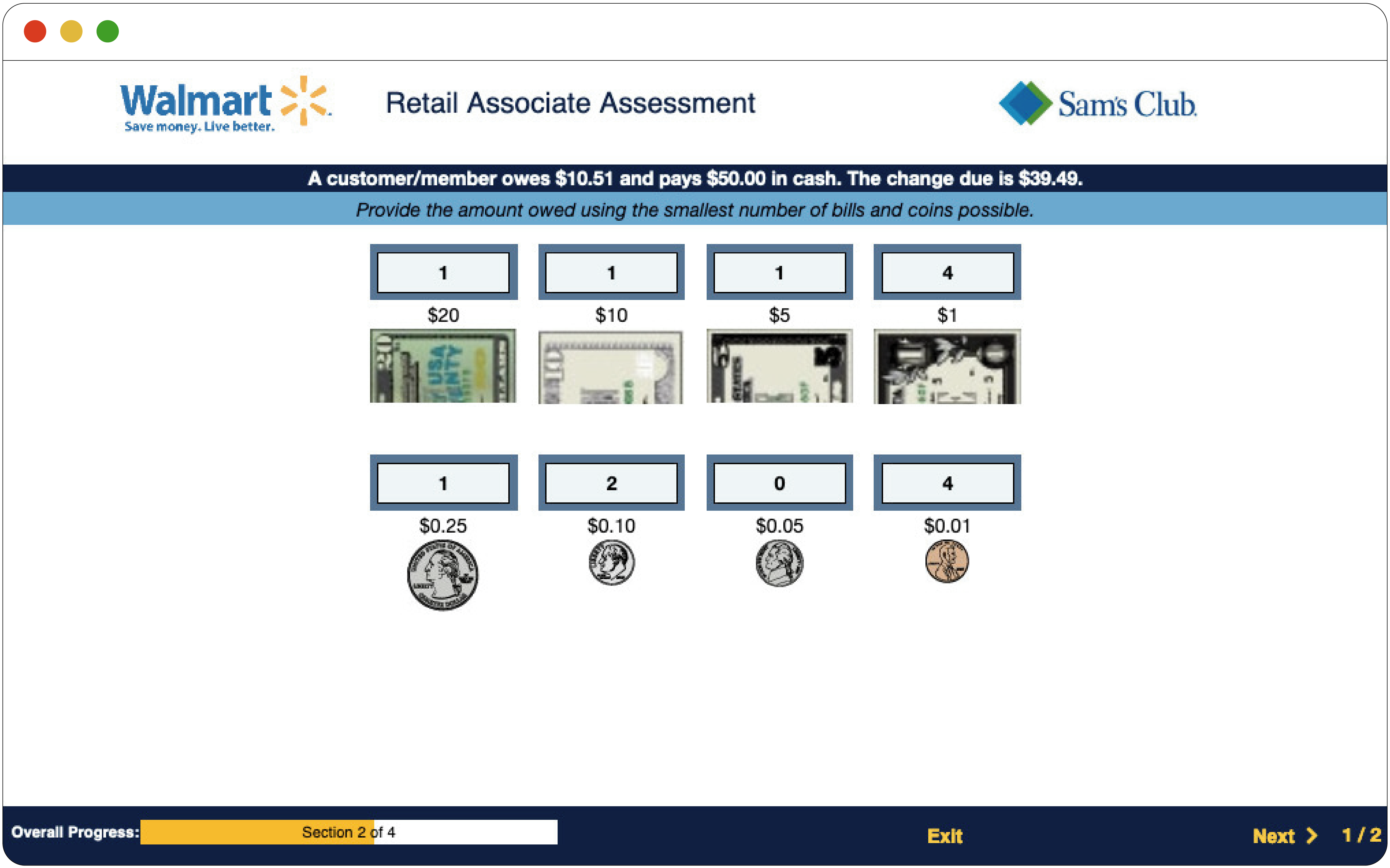

Four employers — Amazon, CVS, Home Depot, and Walmart — administered a range of simulation tests, asking us to perform tasks or work activities that mirror the tasks employees perform on the job. For example, Walmart asked us to count bills and change. (Before beginning the test, we were told we could “have a calculator ready” if we’d like, and to “work quickly and accurately; the less time it takes you, the better.”) Home Depot administered a “code” matching exercise, which asked us to select a matching string of letters and numbers from a series of options. CVS asked us to check whether the price, amount, product number, and shelf location of a product were correct. Amazon presented a more complex simulation called “Stow Pro,” which asked us to store packages on a shelf according to their color and weight.

Figure 4: Walmart Skills Test

This figure shows a question from Walmart’s change counting skills test. It asks “A customer/member owes $10.51 and pays $50.00 in cash. The change due is $39.49. Provide the amount owed using the smallest number of bills and coins possible.” The candidate is presented with coins and bills up to $20, and can indicate the number that they would return to the customer.
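The arithmetic behind this question is straightforward: $50.00 minus $10.51 leaves $39.49 in change. Assuming the test offered standard U.S. denominations ($20, $10, $5, and $1 bills; quarters, dimes, nickels, and pennies), the fewest-pieces answer can be found with a greedy algorithm that repeatedly hands back the largest denomination that still fits, which is optimal for this particular set of denominations. The minimal Python sketch below works in whole cents to avoid floating-point rounding errors; the denomination list is our assumption about what the test displayed.

```python
# Assumed denominations in cents: $20, $10, $5, $1 bills, then quarter, dime, nickel, penny.
DENOMINATIONS_CENTS = [2000, 1000, 500, 100, 25, 10, 5, 1]


def make_change(owed_cents: int, paid_cents: int) -> dict[int, int]:
    """Greedy change-making: map each denomination (in cents) to how many to return."""
    change = paid_cents - owed_cents
    if change < 0:
        raise ValueError("payment is less than the amount owed")
    counts = {}
    for denomination in DENOMINATIONS_CENTS:
        # Take as many of this denomination as fit, then carry the remainder down.
        count, change = divmod(change, denomination)
        if count:
            counts[denomination] = count
    return counts


change = make_change(1051, 5000)  # owes $10.51, pays $50.00
print(change, sum(change.values()))
```

For the figure’s example, this returns one $20, one $10, one $5, four $1 bills, one quarter, two dimes, and four pennies: 14 pieces in all.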

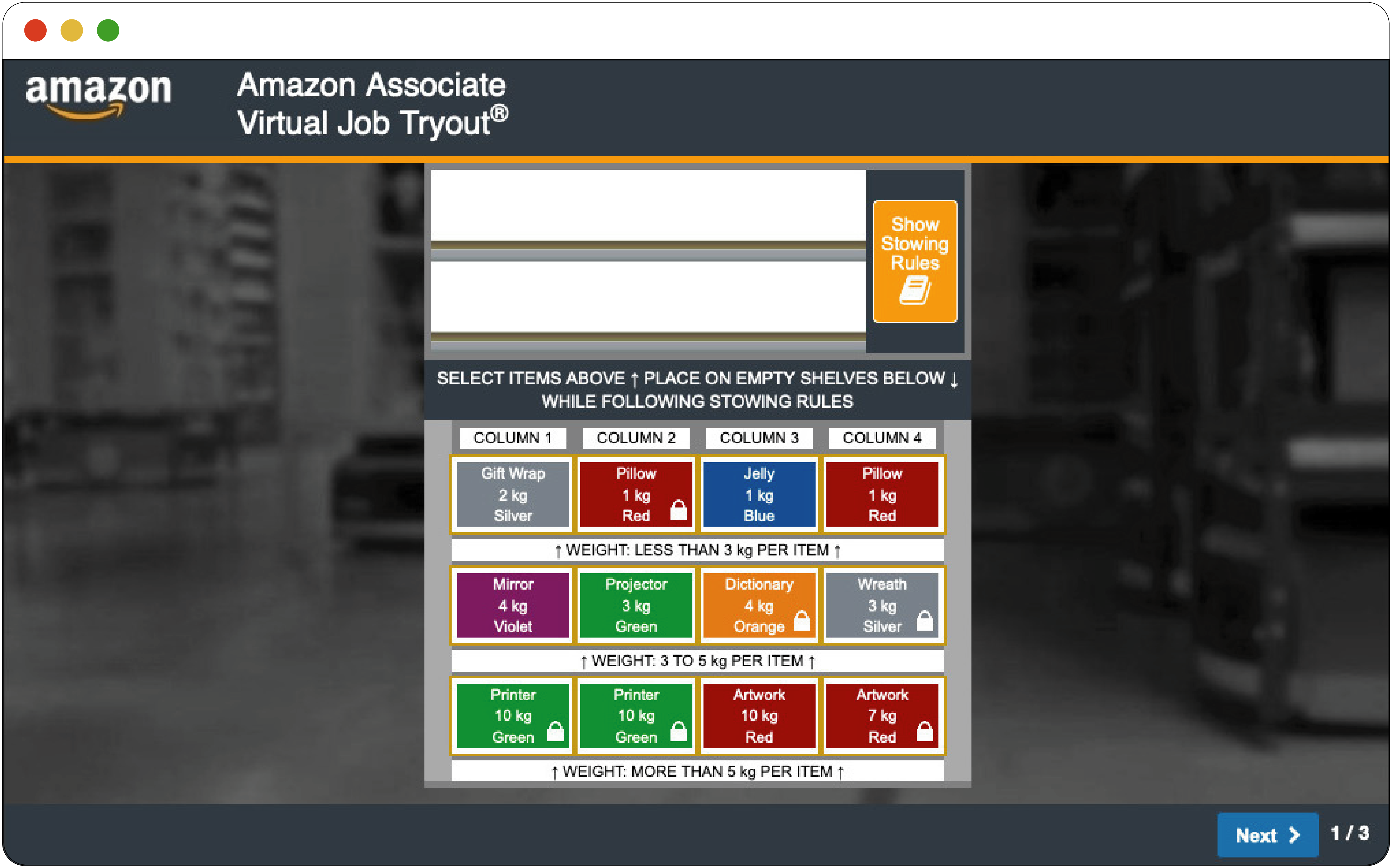

Figure 5: Amazon Skills Test

This figure shows an excerpt from Amazon’s “Stow Pro” assessment. It depicts three rows of “packages” of varying colors, with weights and items listed on them (e.g., “Projector; 3kg; Red.”). The directions read “Select items above, place on empty shelves below, while following the stowing rules.” There is an orange icon titled “Stowing Rules” where candidates can access the rules to follow for the activity.

Three employers administered a multiple-choice assessment, focusing on work experience, attendance, how often and why we left previous jobs, and how a previous supervisor might rate multiple aspects of our performance.

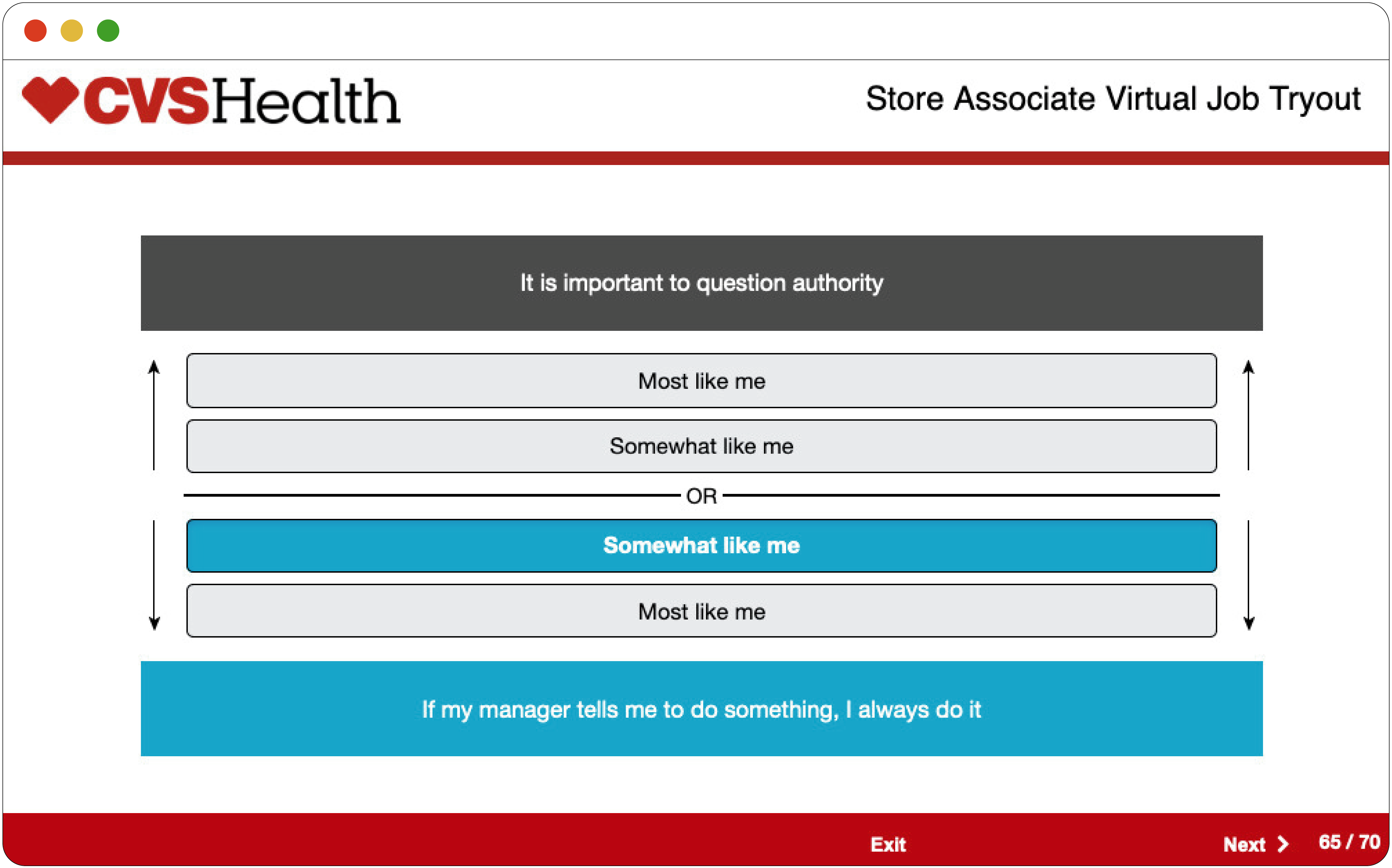

Finally, five employers administered personality tests, which appeared to try to measure our motivations, preferences, interests, and style of interacting with people and situations. Walmart and CVS both issued assessments titled “Describe Your Approach,” which had identical instructions and slightly varied questions. In this test, we were asked to compare two contrasting statements, and identify which we thought was “most like” us. The statements were sometimes mutually exclusive. Other times, both statements might be true and we were forced to choose between them.

Figure 6: CVS Personality Test

This figure shows a question from CVS’s personality tests that asks candidates to select between two statements. The candidate must select which statement is “most like” or “somewhat like” them.

| Company | Paired statements |
|---|---|
| CVS | It is important to question authority / If my manager tells me to do something, I always do it |
| Walmart | Work doesn’t have to be perfect the first time around / Work should be as perfect as possible the first time around |
| Walmart | I can handle whatever comes my way / Some obstacles are too great to overcome |
| Walmart | At times, I have been rude to others / I am never rude to other people |
| CVS | I warm up quickly to others / Sometimes it takes me awhile to warm up to others |
| Walmart | Most people can be trusted / It is smart to question people’s intentions |
| Walmart | I perform best when I am very busy at work / I perform best when my workload is reasonable |

Statements from the “Describe Your Approach” personality test (from Walmart and CVS)

In addition to “Describe Your Approach”, CVS administered a 28-question assessment titled “What Drives You” that purported to learn “what work environment motivates and inspires” candidates. This test required candidates to select one of two statements on the screen (e.g., “I am held accountable to store outcomes” or “I can have a stable, secure lifestyle.”)

Amazon, Home Depot, and Walgreens administered similar tests. Amazon provided individual statements like “I am organized” or “It is important to appreciate each day” and had us rate our agreement from Strongly Disagree to Strongly Agree. Home Depot asked us whether we would “feel less bothered about leaving litter in a dirty park than in a clean one” and whether we see ourselves as “talkative” or “sophisticated in art, music, or literature.”

F. Interviews

After submitting an application, candidates may be asked to schedule an interview with the employer. In our experiments, we were directed to schedule a next step beyond our online application at Amazon, Walgreens, Kroger, and McDonald’s (other employers either rejected us or never followed up). Each of these employers asked for an in-person meeting (these applications were submitted pre-pandemic). Amazon stated that the in-person appointment was not an interview, but for the candidate to “learn more about the job and complete new hire requirements.” We did not proceed with any interviews or appointments beyond our initial online applications.

Concerns and Critiques

In the previous section, we summarized application processes from an applicant’s perspective. In this section, we offer a more critical analysis, drawing from external research, including white papers, vendor marketing materials, policy reports, and expert interviews.

A. Background checks, which can be illegitimate and discriminatory barriers to employment, are easier than ever for employers to adopt.

For several of the applications we submitted, we consented to a background investigation as a condition of submitting the application. Most employers we examined used an ATS capable of integrating with a range of background screening vendors, including social media screens, criminal background checks, credit checks, drug and health screenings, and I-9 and E-Verify checks. However, we were unable to observe which, if any, of these checks were actually administered.

Background checks can be a significant barrier to employment for those who most need work. They often include credit reports and criminal records, both of which reflect racial discrimination in the financial and criminal legal systems. Even the mere request for consent to conduct a background check can dissuade applicants with criminal records or poor credit histories from submitting an application. Background check reports are also notoriously plagued with errors, such as records matched to the wrong person and inaccurate case dispositions. Employers may conduct criminal background checks due to generalized safety concerns, but such concerns are unsupported and are outweighed by the critical source of safety that an income provides.

Recognizing these concerns, several states have enacted legislation to prohibit or limit pre-employment criminal background and credit checks, and some states have limited employers’ use of E-Verify to check immigration information. The Equal Employment Opportunity Commission (EEOC)’s criminal records guidance acknowledges that “national data supports a finding that criminal record exclusions have a disparate impact based on race and national origin[,]” providing “a basis for the Commission to investigate [] disparate impact charges….”

B. Some applications made it difficult for us to convey relevant skills and work history.

In each application we submitted, we were asked to convey work experience and relevant skills, either by filling out a form or uploading a resume. Some of the forms we encountered provided a wide range of predefined responses, many of which appeared entirely subjective, or unrelated to the job for which we were applying. For example, FedEx asked us to select up to 50 skills we possessed from a list, including “Commanding influence,” “Business Appreciation,” “Appreciation of Business Context,” and “Genuinely caring.” It is difficult to imagine any applicant feeling confident navigating these choices.

Automatic resume parsing presents its own challenges. First, the natural language processing (NLP) underlying resume parsers can simply fail, depending on a document’s type and formatting. Tellingly, ATS vendors themselves publish many online resources advising applicants on how to ensure their resumes are parsed correctly. Second, researchers have found that NLP systems trained on real-world data can quickly absorb society’s racial and gender biases. For example, one study found that NLP tools learned to associate common African-American names with negative sentiments, while female names were more likely to be associated with domestic work than professional or technical occupations. Tools that rely on NLP could therefore reflect “expected” linguistic patterns and, as such, could misunderstand, penalize, or even unfairly screen out candidates whose language usage deviates from what is expected.
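The first failure mode is easy to reproduce. The deliberately simplistic Python rule below, a hypothetical stand-in rather than any vendor’s actual parser, extracts employment date ranges written as “Jan 2018 - Jun 2019”. It shows how the same work history can be found or missed depending only on formatting, such as numeric dates or an en dash in place of a hyphen.

```python
import re

# Hypothetical rule: "Mon YYYY - Mon YYYY" or "Mon YYYY - Present", with a plain hyphen.
DATE_RANGE = re.compile(r"\b[A-Z][a-z]{2} \d{4} - (?:[A-Z][a-z]{2} \d{4}|Present)\b")


def extract_date_ranges(text: str) -> list[str]:
    """Return every employment date range the rule recognizes in `text`."""
    # The group is non-capturing, so findall returns whole matches.
    return DATE_RANGE.findall(text)


resume_a = "Cashier, Corner Market, Jan 2018 - Jun 2019"
resume_b = "Cashier, Corner Market, 01/2018 to 06/2019"      # numeric dates
resume_c = "Cashier, Corner Market, Jan 2018 \u2013 Jun 2019"  # en dash instead of hyphen

for resume in (resume_a, resume_b, resume_c):
    print(extract_date_ranges(resume))
```

All three lines describe the same job, but only the first is recognized; the other two applicants would appear to have no dated work history.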

C. Employers offered us little to no feedback during the application process.

In most of the application processes, we received no meaningful feedback from employers. For example, we never received any indication of whether our qualifications were suitable for the job, and we learned nothing about our results on any pre-employment test, with one exception: Walmart told us we had failed its assessments, but not which one. Although employers could feasibly offer this kind of feedback through their ATSs, we suspect they choose not to for a variety of reasons, including fear of liability and accountability for judgments or assumptions made during the application process.

However, this opacity has a range of serious consequences for applicants. For example, when an employer doesn’t show an applicant available shift times, the applicant is forced to guess about the employer’s current needs, and may feel pressure to exaggerate their own availability. When an employer doesn’t tell an applicant how they performed on a simulation test, the applicant will not know if they need to practice particular skills before reapplying. Applicants have little ability to challenge unfair or inaccurate personality tests when employers withhold the results.

D. Employers provide few upfront details about accommodations available for applicants with disabilities.

In many applications, accommodation notices were difficult to find, unclear, or incomplete. For example, Walmart bundled its initial reasonable accommodation notices into the same terms and conditions fine print as consenting to drug tests, background screenings, and enrolling in automated mobile text recruiting programs. Similarly, CVS asked applicants to contact its “Advice and Counsel Reasonable Accommodations team” without providing any contact information, while also noting that CVS would only accept applications for employment via its website. Three employers provided information about reasonable accommodation directly preceding assessments, but none provided information about what the assessments would entail or what kinds of accommodation they might provide.

The Center for Democracy and Technology (CDT) has argued that simply notifying candidates that they may request accommodations is insufficient. Instead, employers should notify candidates of “how a hiring tool works, so that candidates can understand when they may need a reasonable accommodation.” If candidates do not know what an assessment does and what accommodations are available to them, it will not be clear when they can and should request accommodations. CDT concluded that “it is highly preferable for employers and vendors to develop universally accessible testing practices that do not put applicants in this position.”

E. Employers’ personality tests did not clearly measure essential functions for the jobs to which we applied, and might discriminate against some applicants.

As described above, in some applications, we answered a range of personality test questions. These raise several concerns. First, it is not clear whether these tests effectively measure essential functions for the job. Second, they may have discriminatory effects against protected groups, especially people with disabilities. Finally, they may contribute to union-avoidance strategies.

Many of the personality test questions we encountered had little apparent relationship to the jobs for which we were applying. Meta-analyses show that personality tests have relatively low correlations with job performance, compared to other kinds of assessments. Psychometric tests are highly sensitive to environment and small variations in instructions or question type, casting doubt on their reliability as an indicator of job performance. Personality and integrity tests can also yield inconsistent results when administered to the same person multiple times.

Personality tests are often “normed” around a largely “white, middle-class population,” which could contribute to discrimination against other groups. Norming is the process by which numerical test scores are given qualitative meaning. Scores from the norm population are arranged into a distribution, and the most common scores come to define what is “normal.” If that population is homogeneous and not representative of the people to whom the test will ultimately be administered, the test will likely be inaccurate for individuals who do not share the original population’s characteristics. The test may be biased against those who do not fit a certain cultural, class, religious, able-bodied, neurotypical, or racial “norm.” For example, on the popular Minnesota Multiphasic Personality Inventory (MMPI), “members of minority and ethnic religious groups have tended to look disproportionately pathological.” Although the MMPI is not widely used as a hiring tool, the same issues may arise in other personality tests.
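To make the idea of norming concrete, here is a minimal Python sketch that converts a raw score into a percentile rank against a norm sample. Percentile ranking is only one simple norming approach (published instruments often use standardized scores instead), and both norm samples below are hypothetical numbers invented for illustration, not data from any real test. The function name `percentile_rank` is likewise hypothetical.

```python
from bisect import bisect_left


def percentile_rank(raw_score, norm_sample):
    """Percentage of the norm sample scoring strictly below raw_score."""
    ordered = sorted(norm_sample)
    # bisect_left counts how many norm scores are strictly less than raw_score
    return 100 * bisect_left(ordered, raw_score) / len(ordered)


# Hypothetical raw scores on the same scale from two different populations.
norm_group_1 = [10, 12, 14, 15, 16, 18, 20, 22, 24, 26]
norm_group_2 = [4, 6, 7, 8, 9, 10, 11, 12, 14, 16]

score = 15
print(percentile_rank(score, norm_group_1))  # 30.0
print(percentile_rank(score, norm_group_2))  # 90.0
```

Here the same raw score of 15 falls at the 30th percentile of the first group but the 90th percentile of the second, so the population a test happens to be normed on determines whether an applicant is labeled typical or atypical.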

Personality tests could also uniquely discriminate against people with disabilities. In 2012, Roland Behm filed charges with the EEOC against Kroger and several other hourly employers, alleging that their personality tests discriminated against his son, Kyle Behm, on the basis of his bipolar disorder. Kyle Behm had applied for an hourly position at a Kroger pharmacy and learned from a friend that he had been rejected specifically because of his personality test results. Although he had worked successfully in customer service, he was told the results showed he would not work well with customers if he was upset or angry. Kyle told the Wall Street Journal he had never had these issues in previous jobs, and that the employers “would’ve known that if they contacted any of my references.” The EEOC took no public action.

Finally, the history of pre-employment personality tests is partially rooted in efforts to prevent unionization. A 1979 Congressional hearing and subsequent report surfaced “ . . . considerable evidence concerning the screening of applicants who might be ‘susceptible to unionization[,]’” including statements from consultants describing how screening out candidates who were “frustrated,” “prestige seeker[s],” or had a “keen awareness of personal rights” could help employers avoid unionization. The report also documented the racist dimensions of union prevention, noting that some consultants counseled employers to view Black and Indigenous applicants and applicants of color as “more prone to unionization than whites.” The National Labor Relations Act forbids employers from refusing to hire applicants because they are pro-union, but it may be difficult to allege or prove anti-union discrimination from an inscrutable personality test.

Some personality test questions we saw may be part of such union-avoidance strategies. Employers asked applicants whether they “question authority,” or whether they prioritize their well-being over performance on the job. Other questions seemed to gauge our willingness to accept low pay. For example, one question asked whether we prefer a job where “there are high performance expectations” or one where we are “highly compensated for [our] work.” Such questions may help screen out candidates who will agitate for better working conditions or join a union.

F. Employers who broadly screen out candidates based on their availability or pay preferences are likely rejecting qualified candidates.

For almost every hourly job to which we applied, we were asked for our availability without any insight into the employer’s needs for particular shifts; only two employers we applied to provided available shift times. This practice can disfavor candidates with other commitments, such as school, another job, or caring for children or other dependents (a responsibility that falls disproportionately on women). Knowing which shifts employers need filled would help applicants gauge whether their availability meets those needs, answer accurately, and manage their other commitments. If employers do not state their shift needs, and reject or penalize candidates who lack wide availability, they may disproportionately screen out women and reject qualified candidates. Additionally, candidates who feel pressure to report wide availability may end up subjected to unpredictable work hours. Availability and scheduling practices may also be of interest to unions, since a common complaint among hourly workers is inconsistent scheduling and even inconsistent weekly hours, resulting in unpredictable pay.

Asking candidates for their preferred pay rate presents similar problems. Three applications asked candidates to state their desired pay without providing any information about typical pay rates. This might encourage candidates to request lower pay while employers automatically reject those who ask for more, allowing employers to leverage information and power asymmetries to create a potentially exploitative dynamic. Candidates who ask for higher pay than the employer expects may still be willing to work for less, and employers who reject those candidates outright are very likely rejecting qualified candidates.

G. Employers can use software to automatically prioritize and rank applicants without any transparency.

We were not able to see how each employer ultimately analyzed the data it gathered from job seekers. We do know, however, that employers can use a variety of algorithmic tools, made available by a range of vendors, to help automate final hiring decisions. For example, Taleo advertises a “Req Rank” tool that automatically scores applications and resumes by comparing their content to the job description. IBM’s BrassRing ATS can create a single “overall score” if a candidate has taken multiple assessments throughout a hiring process. Sometimes, ATS vendors will work with employers to configure overall thresholds. However, in most cases, the contracts between employers and vendors place final responsibility for configuration with the employer.

During our interviews, multiple vendors expressed concern that employers might misconfigure these higher-level analytic tools. There is also evidence that prioritizing certain inputs to an application, like an applicant’s physical proximity to a workplace, can disproportionately impact people living in economically disadvantaged areas. But today, neither applicants nor researchers have any way of knowing how employers are making decisions behind the scenes.

Civil Rights Law Overview

This section offers a brief overview of relevant civil rights law in the United States. In the next section, we apply this law to the hiring processes discussed above, and highlight gaps.

Under federal and state civil rights laws, employers may not screen out applicants on the basis of a protected class. Title VII prohibits discrimination on the basis of race, color, religion, sex, or national origin; the Americans with Disabilities Act (ADA) prohibits discrimination on the basis of disability; and the Age Discrimination in Employment Act (ADEA) prohibits discrimination based on age. All three laws prohibit intentional discrimination (“disparate treatment”) and facially neutral practices that have a discriminatory effect (“disparate impact”). State and local laws generally extend the same protections, although some jurisdictions add protected classes, such as income or political affiliation.

A. Title VII and the Uniform Guidelines on Employee Selection Procedures

Under Title VII, an employment practice that causes a disparate impact is unlawful unless the employer can demonstrate that the practice is “job related for the position in question and consistent with business necessity” (42 U.S.C. § 2000e–2(k)(1)(A)(i)). Practices that are sufficiently job related may still be unlawful if the complaining party demonstrates that there is a less discriminatory alternative and the employer has refused to adopt it.

The EEOC, which enforces Title VII, adopted the Uniform Guidelines on Employee Selection Procedures (UGESP) in 1978 to provide guidance to employers on the application of Title VII to hiring procedures, including how to determine whether selection procedures have a disparate impact on the basis of race, ethnicity, sex, or gender, and whether the procedures are job related. The UGESP do not apply to discrimination based on other characteristics such as age or disability, or intersectional discrimination. While the UGESP do not directly bind most private sector employers, they are viewed by courts as influential guidance for applying Title VII, and they are codified as binding regulations for federal contractors.

The UGESP gives employers a benchmark for determining whether a hiring process is likely to have a disparate impact: the “four-fifths rule.” According to this standard, federal enforcement agencies will generally not take action against an employer for disparate impact if the employer’s hiring rate for a protected group is at least 80% that of the group with the highest selection rate. This standard is commonly cited by industry and research communities, both within and outside of the employment context. However, agency guidance has repeatedly cautioned that the four-fifths standard is not intended to be a final determination of disparate impact, and that enforcement agencies may find disparate impact based on other indicators. Courts deciding Title VII cases have declined to adopt the four-fifths standard — or any arithmetic benchmark — as a singular definition of disparate impact.
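The arithmetic behind the four-fifths rule can be sketched in a few lines of Python: compute each group’s selection rate, divide it by the highest group’s rate, and flag any ratio below 0.8. The function names and the applicant counts in the usage example are hypothetical, and, as the agency guidance cautions, passing or failing this check is a rough benchmark rather than a legal determination of disparate impact.

```python
def selection_rates(selected, applicants):
    """Selection rate per group: number selected divided by number who applied.

    Assumes every group has at least one applicant.
    """
    return {g: selected[g] / applicants[g] for g in applicants}


def impact_ratios(selected, applicants):
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = selection_rates(selected, applicants)
    top = max(rates.values())
    if top == 0:
        raise ValueError("no applicants were selected in any group")
    return {g: rate / top for g, rate in rates.items()}


def below_four_fifths(selected, applicants, threshold=0.8):
    """Groups whose impact ratio falls below the four-fifths (80%) benchmark."""
    ratios = impact_ratios(selected, applicants)
    return sorted(g for g, ratio in ratios.items() if ratio < threshold)


# Hypothetical counts: group_a is selected at 30%, group_b at 20%.
applicants = {"group_a": 200, "group_b": 100}
selected = {"group_a": 60, "group_b": 20}

print(impact_ratios(selected, applicants))      # group_b ratio is 0.2 / 0.3, about 0.67
print(below_four_fifths(selected, applicants))  # ['group_b']
```

Because group_b’s selection rate is only about two-thirds of group_a’s, it falls below the 80% benchmark, even though nothing in the calculation says why the gap exists or whether the procedure is job related.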

Selection procedures that have a disparate impact can still be legally justified if the employer can show that they are sufficiently “job related.” For determining whether a selection procedure is job related, the UGESP adapted the concept of “validity” from psychology. The current version of the American Psychological Association’s Standards for Educational and Psychological Testing (“APA Standards”) defines validity as “the degree to which evidence and theory support the interpretations of test scores for proposed uses of tests.”

The UGESP presents three different types of validity as “independent path[s] through which an employer can establish the job relatedness of a selection procedure . . . .” (1) Content validity means “the content of the selection procedure is representative of important aspects of performance on the job . . .”; (2) construct validity means that the selection procedure “measures the degree to which candidates have identifiable characteristics which have been determined to be important in successful performance in the job . . .”; and (3) criterion validity means “the selection procedure is predictive of or significantly correlated with important elements of job performance[,]” based on empirical evidence. Enforcement agencies will only request validity evidence from employers if their hiring processes are under investigation for disparate impact.

The UGESP advises that, “whenever a validity study is called for” (i.e., when an adverse impact is detected), the study should include “an investigation of suitable alternative selection procedures…which have as little adverse impact as possible….”

B. The Americans with Disabilities Act

The ADA prohibits hiring practices that screen out or tend to screen out qualified applicants on the basis of disability. A test or criterion that screens out applicants based on disability can only be justified if it measures applicants’ ability to perform the essential functions of the particular job. If the criterion in question is an essential job function, the employer still must not use it to screen out disabled applicants if they could perform the essential job functions with a reasonable accommodation. For example, EEOC guidance provides the following hypothetical:

An employer has a job opening for an administrative assistant. The essential functions of the job are administrative and organizational. Some occasional typing has been part of the job, but other clerical staff are available who can perform this marginal job function. There are two job applicants. One has a disability that makes typing very difficult, the other has no disability and can type. The employer may not refuse to hire the first applicant because of her inability to type, but must base a job decision on the relative ability of each applicant to perform the essential administrative and organizational job functions, with or without accommodation.

Employers must provide reasonable accommodations to make the application process accessible to people with disabilities. If a hiring test is not accessible to a disabled candidate, the employer may need to design an alternative method to evaluate the candidate’s abilities to perform the essential job functions. EEOC guidance advises that “[a] test will most likely be an accurate predictor of the job performance of a person with a disability when it most directly or closely measures actual skills and ability needed to do a job.”

C. Executive Order 11246 and the Office of Federal Contract Compliance Programs

Most employers that have contracts with the federal government are subject to a particular set of civil rights obligations, which apply to all of the employers’ hiring, not just to hiring for a federal contract. That means federal contractor obligations reach a broad swath of non-federal hiring by many large employers.

In addition to following Title VII and the ADA’s antidiscrimination standards, federal contractors also must “take affirmative action to ensure that applicants are employed . . . without regard to their race, color, religion, sex, sexual orientation, gender identity, or national origin,” and “to employ and advance in employment qualified individuals with disabilities.” To meet these requirements, employers must track the gender, race, ethnicity, and disability status (based on voluntary self-identification) of each applicant and employee. The UGESP require federal contractors to maintain data showing the impacts of their hiring processes on race, ethnicity, and gender groups, and the Office of Federal Contract Compliance Programs (OFCCP)’s regulations governing affirmative action for disabled workers require contractors to track the percentage of employees who identify as disabled. Employers must also maintain a written Affirmative Action Plan (AAP) that sets and reports on progress toward diversity goals.

Each year, the OFCCP selects several thousand employers for a routine audit. Employers must send their written AAPs to the OFCCP, along with other supporting documentation. The exact nature of the supporting documentation can vary by year; in 2020, it included the number of applicants and hires for each job category, identified by gender and race/ethnicity. If the OFCCP finds evidence of adverse impacts, such as a selection rate that fails the four-fifths standard or failure to meet affirmative action goals, it may move on to an “on-site audit,” during which it has wide discretion to review all aspects of employers’ hiring practices and check for discrimination. When the OFCCP finds violations, it may enter into a conciliation process with the contractor.

Legal Gaps and Limitations

This section highlights key gaps and limitations in the federal civil rights enforcement regime in light of our research.

A. The EEOC’s oversight is largely reactive, leaving many hiring selection procedures unexamined.

The EEOC’s enforcement of Title VII and the ADA largely relies on individuals to file charges of discrimination. Once a charge has been filed, the Commission has broad power to subpoena relevant evidence. However, this investigatory power does not exist before a charge has been filed, so the Commission has limited access to information that could help it decide where to pursue enforcement. The Commission’s reliance on individual complaints is troublesome because, as the Commission has acknowledged, “victims typically lack information about a discriminatory hiring policy or practice.” Moreover, it is often not in an applicant’s best interest to file a charge against an employer they wish to work for.

In the absence of a complaint, the EEOC can initiate its own “commissioner charge.” Commissioner charges have been critical for addressing systemic discrimination, but they usually stem from investigations into complaints filed by individuals. For example, while investigating individual ADA complaints of discriminatory attendance policies at some Verizon affiliates, the EEOC discovered that the same policy was in effect at all Verizon affiliates, and filed a commissioner charge naming all of them.

B. The OFCCP’s audits depend on employer compliance, and may not be detailed enough.

By contrast, the OFCCP’s enforcement regime for federal contractors is structured around proactive reporting and oversight. The office audits thousands of employers’ affirmative action plans every year, regardless of whether it receives complaints. The employers’ contracts with the federal government, as well as their affirmative action obligations, help explain the OFCCP’s more proactive posture. For example, federal contractors are supposed to track their annual progress toward a goal of having 7% of their workforce be people with disabilities. If a contractor fails to make progress and doesn’t provide a satisfactory analysis of improvements it can make, the OFCCP may conduct an on-site review to investigate why the employer is falling short. Federal contractors also face stricter requirements to collect and retain applicant data, providing more documentation for the OFCCP to review.

While the OFCCP appears to engage in more proactive oversight than the EEOC, there are still barriers to surfacing potential discrimination in federal contractors’ hiring processes. The hiring data that the OFCCP receives during a desk audit (the first auditing phase) is limited to overall hiring data for each job group. Desk audits are not designed to surface information about which selection devices and technologies employers are using and what they purport to measure. The OFCCP can access this information during an on-site audit, but it will only move on to this step if the employer’s documentation is incomplete or points toward potential discrimination.

A recent OFCCP report revealed that employers often fail to maintain required documentation, which could frustrate oversight efforts. The report summarized the office’s 2020 auditing activity under Section 503 of the Rehabilitation Act of 1973, which prohibits federal contractors from discriminating against people with disabilities and requires them to take affirmative action to employ and advance qualified individuals with disabilities. According to the report, the most common violations the OFCCP found during its audits included “[f]ailure to invite applicants and employees to self-identify as an individual with a disability” and failure to document the number of applicants and hires identifying as people with disabilities. Despite these violations, the report stated that “ . . . OFCCP ha[d] not found any discrimination or reasonable accommodation violations in the focused reviews.”

C. Federal regulators produce too little public precedent and research.

When the EEOC or OFCCP finds evidence of discrimination or other violations, they often attempt to resolve them through confidential, conciliation-oriented processes. The EEOC does not release its charges, findings, or conciliation agreements (the OFCCP has made some conciliation agreements public). Title VII forbids the EEOC from making public its charges or anything said or done during its settlement efforts with employers. Unlike some other agencies, such as the FTC, the EEOC has no public body of enforcement precedent that can be used to set forward-looking best practices.

Title VII gives the EEOC the authority to “make such technical studies as are appropriate to effectuate the purpose and policies of [Title VII] and to make the results of such studies available to the public.” However, the Commission recently expressed doubt that it could use this authority to obtain non-public information about hiring practices as a practical matter.

The Commission has provided very little public information about its knowledge of or activities around hiring technologies. In 2016, the Commission held a meeting on the implications of big data for equal employment opportunity; however, it released no reports or guidance following the meeting. The same year, in a report on the Commission’s systemic enforcement, Chair Jenny R. Yang acknowledged the need for the Commission to do more research:

[I]t is important for EEOC to consider additional sources of information in identifying issues where government enforcement is most needed. . . . For example, EEOC's Research and Data Plan identifies the need for research on screening devices, tests, and other practices to identify barriers to opportunity as well as promising selection practices that rely on job-related criteria. Such research will help the agency focus its enforcement efforts in ensuring that selection practices are related to performance of the job.

D. The UGESP defines “job relatedness” too permissively, and does not reflect modern social science standards.

Under Title VII, hiring practices with a disparate impact can be justified if they are sufficiently “job related.” However, “job relatedness” is a more permissive standard than the term implies. The UGESP gives employers broad discretion to design their own validity studies or borrow from existing ones. For example, a common method of establishing validity is to show that a test score or criterion is associated with better performance reviews from supervisors (which are themselves subject to bias). Many vendors also claim that their selection procedures have “generalized validity” for a range of jobs and skills even though repurposing assessments for different work environments can damage their reliability in predicting job performance, especially if the skill being assessed is not essential to the job in question.

The UGESP, especially as interpreted by some courts, has made it far too easy for employers to defend their use of discriminatory selection devices. For example, in 2016, the U.S. Court of Appeals for the First Circuit found that a test for promoting police officers was lawful, despite having a large disparate impact on Black and Hispanic applicants, because an industrial-organizational psychologist testified that the test was “minimally valid” (the psychologist found that about 80% of the test was invalid). As Derrick A. Bell, Jr. observed, “[I]f the country was really committed to eradicating the social and economic burdens borne by the victims of employment discrimination, it would have fashioned a far more efficacious means of accomplishing this result.”

The UGESP’s definition of validity is out of step with the social science standards it purports to reflect. The APA standards do not justify the UGESP’s view of validity as an independent defense against evidence of discrimination. To the contrary, modern social science views fairness as an important part of the inquiry into whether a test is “valid.” For example, the APA standards advise that tests should be examined for “differential validity” (whether the test overpredicts or underpredicts job performance for a certain subgroup) and that such bias can directly undermine validity. The APA standards have also moved away from the UGESP’s “trichotomous separation of test validity into content, criterion, and construct validity[,]” and instead treat validity as “a unitary concept with different sources of evidence contributing to an understanding of the inferences that can be drawn from a selection procedure.”
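One simple way to see what “overpredicts or underpredicts” means is to fit a single least-squares line of job performance on test score for all applicants combined, then average the prediction errors within each subgroup. The Python sketch below does this with hypothetical data and a hypothetical function name. It is a simplified illustration of a regression-based check for predictive bias, not the procedure the APA standards prescribe; a fuller analysis would typically compare group-specific slopes and intercepts and test whether the differences are statistically meaningful.

```python
from collections import defaultdict


def mean_residual_by_group(records):
    """records: list of (group, test_score, performance) tuples.

    Fits one ordinary-least-squares line (performance ~ test_score) on the
    pooled data, then averages actual-minus-predicted performance per group.
    A positive mean means the common line underpredicts that group;
    a negative mean means it overpredicts.
    """
    xs = [r[1] for r in records]
    ys = [r[2] for r in records]
    n = len(records)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar

    residuals = defaultdict(list)
    for group, x, y in records:
        residuals[group].append(y - (intercept + slope * x))
    return {g: sum(v) / len(v) for g, v in residuals.items()}


# Hypothetical data: identical test scores, but group_b performs better on the job.
records = [
    ("group_a", 1, 2), ("group_a", 2, 3), ("group_a", 3, 4), ("group_a", 4, 5),
    ("group_b", 1, 4), ("group_b", 2, 5), ("group_b", 3, 6), ("group_b", 4, 7),
]
print(mean_residual_by_group(records))  # {'group_a': -1.0, 'group_b': 1.0}
```

In this made-up data, the pooled line underpredicts group_b by one performance point on average and overpredicts group_a by the same amount, so the test systematically understates how well group_b applicants would do on the job.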

E. Employers have few legal incentives to investigate alternative, less discriminatory selection procedures.

In practice, most employers face too little pressure from enforcers to meaningfully evaluate their selection devices and consider less discriminatory alternatives. One reason for this is known as the “bottom line” standard. The UGESP advises that “the Federal enforcement agencies . . . will not expect [an employer] to evaluate the individual components” of its hiring process (such as tests, resume screening, or interviews) for discrimination or validity, as long as the employer’s overall hiring process does not have an adverse impact. If an employer’s overall hiring process passes the four-fifths standard, it has little incentive to analyze whether individual hiring tests are disproportionately screening out protected groups.
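The following Python sketch, using hypothetical counts for a two-stage process (a screening test followed by an interview), illustrates how evaluating only the overall process can hide a component with adverse impact. The group labels, stage names, and numbers are invented for illustration. The screening test fails the four-fifths benchmark for group_b, but because the later interview stage happens to select group_b at a higher rate, the overall process passes.

```python
def impact_ratio(rate_by_group):
    """Each group's rate divided by the highest group's rate."""
    top = max(rate_by_group.values())
    return {g: rate / top for g, rate in rate_by_group.items()}


# Hypothetical counts for a two-stage process: a screening test, then an interview.
applicants = {"group_a": 100, "group_b": 100}
passed_test = {"group_a": 80, "group_b": 50}
hired = {"group_a": 24, "group_b": 20}  # interview selects 30% of group_a, 40% of group_b

test_rates = {g: passed_test[g] / applicants[g] for g in applicants}
overall_rates = {g: hired[g] / applicants[g] for g in applicants}

test_ratio = impact_ratio(test_rates)["group_b"]        # 0.5 / 0.8 = 0.625
overall_ratio = impact_ratio(overall_rates)["group_b"]  # 0.20 / 0.24, about 0.833

print(f"screening test impact ratio: {test_ratio:.3f}")
print(f"overall process impact ratio: {overall_ratio:.3f}")
```

An employer that checks only the overall ratio (about 0.83 here) would see no problem, even though the screening test on its own passes group_b at only 0.625 times group_a’s rate.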

Employers also are not incentivized to compare alternative selection procedures to find the least discriminatory means of accomplishing their hiring goals. Under Title VII, hiring practices that have a disparate impact, even those justified as “job related,” are unlawful if the employer could have used a less discriminatory alternative. In practice, however, this standard operates as a burden on the plaintiff in litigation: after an employer has successfully defended a discriminatory hiring practice as “job related,” the plaintiff can present evidence that the employer could have used a less discriminatory alternative. Very few cases have ever been decided on that basis. In a recent interview, an OFCCP official resisted even the implication that employers have any obligation to proactively consider less discriminatory alternatives, and warned that doing so could lead to legal liability.

In some cases, employers who try to correct for discriminatory hiring assessment results may face liability for disparate treatment. In Ricci v. DeStefano, the Supreme Court held that the City of New Haven intentionally discriminated when it invalidated the results of a firefighter promotion test that had a disparate impact on Black and Hispanic firefighters. Ricci created a presumption that throwing out a test that has already been administered, to correct for a racial disparity in its results, constitutes unlawful disparate treatment. However, the Ricci Court also held that “Title VII does not prohibit an employer from considering, before administering a test or practice, how to design that test or practice in order to provide a fair opportunity for all individuals, regardless of their race.” As Scherer et al. observed, Ricci has not limited employers’ ability to design and test selection procedures to minimize disparate impacts before finalizing their hiring processes.

F. Standards for addressing disability discrimination are underdeveloped.

The EEOC’s guidance for complying with the ADA focuses largely on the need to provide reasonable accommodations, a responsibility that it says “ . . . generally will be triggered by a request from an individual with a disability, who frequently can suggest an appropriate accommodation.” The Commission provides little guidance on designing selection procedures to avoid disability discrimination in the first place. This approach situates the burden of avoiding discrimination with disabled job applicants, who may not have enough information about the employer’s hiring assessments and what they’re attempting to measure.

The UGESP, the main source of guidance on assessing discrimination in selection procedures, does not address disability discrimination, and disability discrimination cannot simply be shoehorned into the UGESP’s current approach, which relies on the four-fifths standard. As Alexandra Reeve Givens has explained, because disabilities manifest in diverse ways, statistical audits are generally not an effective means of assessing disability discrimination. While federal contractors must give all applicants an opportunity to self-identify as having a disability and must track their rates of hiring people with disabilities, such measures are voluntary for other private-sector employers.


Recommendations

This section offers recommendations, incorporating the preceding empirical and legal analyses.

A. Regulators must be more proactive, and modernize standards for assessing the discriminatory effects of hiring selection procedures.

Our research shows that there are hard limits to what any applicant, researcher, or advocate can know about employers’ use of hiring technologies. As discussed in Section VI.A, the EEOC’s largely reactive oversight means that, as a practical matter, many hiring selection procedures are left unexamined. This leaves a major gap in legal accountability and contributes to a severe lack of overall transparency. In the short term, we urge the EEOC to increase its use of Commissioner charges and directed investigations, which are well-suited to tackling systemic discrimination. We also encourage the EEOC to creatively and aggressively use its statutory research authority to help develop a more detailed picture of how large employers are using hiring technologies today. Longer term, Congress may need to grant the EEOC new powers to ensure that it can provide effective oversight. In the meantime, state regulators may have more flexibility to take on a proactive role.

The OFCCP has a unique role to play in guarding against discrimination and ensuring equity in hiring. Its ability to proactively audit employers’ practices is likely to become increasingly important in the years to come. However, as discussed in Section VI.B, the OFCCP’s oversight could be more detailed and effective. In particular, the OFCCP should seek to take advantage of the more granular data generated by modern ATSs, and it may require new staff and technological capacities to make sense of these larger datasets. The OFCCP should also assess its ability to make more detailed, synthesized results from its routine audits available to the public, to contribute to the development of research and enforcement priorities.

B. Employers should only attempt to assess the “essential functions” for a given job, and discontinue use of personality tests in the hiring process.

Our research shows that some employers are including personality tests in their online applications. Many of the questions we saw lacked any apparent connection to the essential functions of the jobs for which we applied, and they raised discrimination concerns, particularly with respect to disability and union organizing. By purporting to assess applicants’ personalities against some norm, personality tests reflect ableist assumptions about the type of person that makes a good job candidate. When deployed at scale, these tests can systematically lock people out of employment if they don’t fit the “norm.”

Today, the law tolerates hiring tests that are arbitrary or attenuated, as long as they don’t result in discrimination. However, neither employers nor federal regulators have developed adequate methods for detecting discrimination in hiring tests — especially for disability discrimination, which can take many different and subtle forms and eludes statistical testing. Existing validity guidance is permissive, and does little to prevent pervasive use of personality tests that have discriminatory effects.

There is a better way. EEOC guidance states that “[a hiring] test will most likely be an accurate predictor of the job performance of a person with a disability when it most directly or closely measures actual skills and ability needed to do a job.” While this guidance only applies to an employer defending against evidence of disability discrimination, we believe that this “essential functions” standard should be applied to all pre-employment tests, at least for the entry-level jobs that people depend upon for their survival. For many jobs, an employer cannot hire all or even most of the qualified applicants. In these cases, some arbitrariness may be inevitable. But arbitrary measures should not risk systematically locking some groups of people out of entire sectors of employment.

C. Employers should give applicants more information throughout the application process, including about the purpose of selection procedures, the reasons for automated rejections, and details about reasonable accommodations.

Throughout this research, we observed a persistent and glaring information asymmetry between employers and applicants. Employers rarely tell applicants the purpose of selection procedures, how they performed on pre-employment tests, or why their applications were rejected. Yet vendors told us that this kind of feedback is easy to offer, even at scale. In practice, employers simply have few incentives to provide it.

Applicants should be able to improve their skills, seek reasonable accommodations, and vindicate their legal rights. This can’t happen unless employers offer far more information throughout the application process. State and federal policymakers should consider ways to ensure this happens.

Conclusion

Our research shows that employers wield significant power and discretion through their online application processes. It is critical that regulators, employers, vendors, and others proactively assess hiring selection procedures to ensure that all applicants are treated fairly. Technology has the potential to help achieve these goals, but much work remains to be done.

Acknowledgements

This research was funded by the Annie E. Casey Foundation. We thank them for their support. The findings and conclusions presented in this report are those of the authors alone, and do not necessarily reflect the opinions of the Foundation.

We are grateful for all those who provided valuable input and feedback as we worked on this report, including: Beth Avery, Senior Staff Attorney, National Employment Law Project; Gaylynn Burroughs, Senior Policy Counsel, The Leadership Conference on Civil and Human Rights; Sara Kassir, Senior Policy & Research Analyst, pymetrics; Lisa Kresge, Lead Researcher, Technology and Work Program, UC Berkeley Center for Labor Research and Education; Wilneida Negrón, Director of Policy and Research, Coworker; Aiha Nguyen, Program Director, Labor Futures, Data & Society; Frida Polli, Chief Executive Officer, pymetrics; Dariely Rodriguez, as Director of the Economic Justice Project, Lawyers’ Committee for Civil Rights Under Law; Matthew Scherer, Senior Policy Counsel for Worker Privacy, Center for Democracy and Technology; Ridhi Shetty, Policy Counsel, Privacy & Data Project, Center for Democracy and Technology; Ken Wang, Legislative Policy Associate, California Employment Lawyers Association; Jane Wu, Associate Partner, IBM Talent & Transformation; Jenny Yang, as a nonresident fellow in the Center on Labor, Human Services, and Population at the Urban Institute; and many others not named here. The views expressed in this report are ours alone. We take full responsibility for any errors that remain.

Thanks to Objectively for assisting with the design of our report.

Upturn is supported by the Ford Foundation, the Open Society Foundations, the John D. and Catherine T. MacArthur Foundation, Luminate, the Patrick J. McGovern Foundation, and Democracy Fund.

Appendix A: Artifacts

All artifacts from our research, including screenshots and proxy logs, are available at:

https://drive.google.com/drive/u/0/folders/1byMg-ounTIc7fvXk6DZF-yw8gSMBMo1u.

| Employer | Artifacts |
|---|---|
| 7-Eleven | Screenshots, Proxy Logs |
| Amazon | Screenshots, Proxy Logs |
| Costco | Screenshots, Proxy Logs |
| CVS Health | Screenshots, Proxy Logs 1, Proxy Logs 2 |
| FedEx | Screenshots, Proxy Logs |
| Home Depot | Screenshots, Proxy Logs |
| Kroger | Screenshots, Proxy Logs |
| Lowe’s | Screenshots, Proxy Logs |
| McDonald’s | Screenshots, Proxy Logs |
| Starbucks | Screenshots, Proxy Logs |
| Target | Screenshots, Proxy Logs |
| TJMaxx | Screenshots, Proxy Logs |
| UPS | Screenshots 1, Screenshots 2, Proxy Logs 1, Proxy Logs 2 |
| Walgreens | Screenshots, Proxy Logs |
| Walmart | Screenshots (Initial Application), Screenshots (Reapplication), Proxy Logs 1, Proxy Logs 2 |

Appendix B: Application Comparison

| | 7-Eleven | Amazon | Costco | CVS Health | FedEx | Home Depot | Kroger |
|---|---|---|---|---|---|---|---|
| Pre-employment test | • | • | • | ||||
| Eligible to work in the U.S. | • | • | • | • | • | • | |
| Candidate availability | • | • | • | • | • | ||
| Minimum age | • | • | • | • | • | • | • |
| H-1B | • | • | |||||
| Drug test | • | • | • | • | • | • | |
| Previous or current employee | • | • | • | • | • | • | |
| Other languages | • | ||||||
| Social security number | • | • | • | ||||
| Current student | • | ||||||
| English fluency | |||||||
| Highest level of education | • | ||||||
| Pay expectations | • | ||||||
| Dress code | |||||||
| Physical demands | |||||||
| Access to reliable transportation | • | ||||||
| Skills | • | ||||||
| Background check consent | • | • | • | ||||
| Reference check consent | • | • | • | • | |||
| Upload candidate resume | • | • | • | • | • | ||
| Work experience | • | • | • | • | • | • | |
| Voluntary Self-identification of Disability | • | • | • | ||||
| EEO-1 Voluntary Self Identification Form | • | • | • | • | • | • | • |
| Reasonable accommodation notice | • | • | • | • | • | ||
| Reasonable accommodation notice with clear contact information | • | • | • | • | |||
| Reasonable accommodation notice right before assessment | • | • | |||||
| Enroll in text and/or call recruiting | • | • | |||||
| Self-identify veteran status | • | • | • | • | • | • | • |
| Work Opportunity Tax Credit | • | • | • | • | |||
| Automatically consider candidate for other positions | • |

| | Lowe’s | McDonald’s | Starbucks | Target | TJMaxx | UPS | Walgreens | Walmart |
|---|---|---|---|---|---|---|---|---|
| Pre-employment test | • | • | ||||||
| Eligible to work in the U.S. | • | • | • | • | • | • | • | • |
| Candidate availability | • | • | • | • | • | • | • | • |
| Minimum age | • | • | • | • | • | • | • | • |
| H-1B | • | |||||||
| Drug test | • | • | • | • | • | |||
| Previous or current employee | • | • | • | • | • | • | ||
| Other languages | • | • | • | • | • | |||
| Social security number | • | |||||||
| Current student | • | • | • | • | ||||
| English fluency | • | |||||||
| Highest level of education | • | • | • | • | ||||
| Pay expectations | • | |||||||
| Dress code | • | • | ||||||
| Physical demands | • | • | ||||||
| Access to reliable transportation | • | |||||||
| Skills | • | • | ||||||
| Background check consent | • | • | • | |||||
| Reference check consent | • | • | ||||||
| Upload candidate resume | • | • | • | • | • | |||
| Work experience | • | • | • | • | • | • | • | • |
| Voluntary Self-identification of Disability | • | • | • | |||||
| EEO-1 Voluntary Self Identification Form | • | • | • | • | • | • | ||
| Reasonable accommodation notice | • | • | • | • | • | • | • | • |
| Reasonable accommodation notice with clear contact information | • | • | • | • | • | |||
| Reasonable accommodation notice right before assessment | • | |||||||
| Enroll in text and/or call recruiting | • | • | • | • | • | |||
| Self-identify veteran status | • | • | • | • | ||||
| Work Opportunity Tax Credit | • | • | • | • | • | • | ||
| Automatically consider candidate for other positions | • | • |
